Test Server Puzzle

Feb 2 2011, 11:14PM

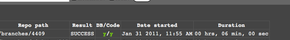

I recently had the opportunity to rebuild our test server system for QA testing at work, and I found a few solutions that I thought were worth sharing. The finished system centers on a custom console for reserving test servers, launching repository checkouts and/or database reloads, and viewing a short history of configurations for each server. See the screenshot.

The Need

For more than six months we had been running no more than 4 test servers on an old machine without hardware virtualization support. Any attempt to run a 5th virtual server would likely wedge the machine. This was by no means sufficient for QA needs. The first step was getting a new host machine (we went with a Dell PowerEdge R610). Next was choosing the toolset. I started with KVM, got a base CentOS image running with the virt-install command, and moved on to puppet mastering.

Puppet configuration management

Puppet has been a huge win for us in general over the last several months. In particular, the configurations of the 4 test servers on the old system had already drifted hopelessly out of sync (they should have been identical systems). I already had most of the package configuration written for other application environments - it took just a little tweaking and a few regular-expression node definitions to get 90% of the configuration set for all of the new servers.

Our stack is basically Linux, Apache/mod_wsgi, Django, virtualenvs and Postgres. Puppet now manages just about all of that and quite a bit more. The only pieces I didn't feel I could manage through Puppet were repository checkouts, and the settings files that are server and application specific: apache.conf, wsgi and the django settings file.

Database

We have large databases. We are working on scripts to trim those down to smaller QA sets, but for the time being we test on full copies. On the old test servers, getting a fresh database meant an scp of the dmp file followed by a pg_restore. For our largest DB this process was known to take nearly two hours. With the new setup I opted for a separate, non-virtualized DB host. I had already seen that copying a database with createdb and its --template flag (the "copydb" approach) was much faster than a pg_restore. In addition, I reasoned that since the virtual test servers wouldn't have an abundance of RAM (1GB each), it would be more efficient to let a dedicated machine handle the databases.

We now have one nightly job that transfers the dmp file from our backups and runs pg_restore. For the rest of the day, copies of those databases are made on the database server as needed. The "base" databases can't be used normally, because Postgres will not copy a database from a template while other sessions are connected to it. That's the one caveat to the "copydb" plan: they just sit there to support copying.
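As a rough sketch of the copy step, assuming it is done with PostgreSQL's createdb and its --template option: the database names and both helper functions below are hypothetical, and the real script (which is bash) isn't shown in this post.

```python
import subprocess


def build_copy_command(base_db, target_db, owner=None):
    """Return the argv list that copies base_db to target_db with createdb."""
    cmd = ["createdb", "--template=" + base_db]
    if owner:
        cmd.append("--owner=" + owner)
    cmd.append(target_db)
    return cmd


def copy_database(base_db, target_db, owner=None):
    """Run the copy; raises CalledProcessError if createdb fails.

    createdb fails when another session is connected to base_db,
    which is why the "base" databases are never used directly.
    """
    subprocess.check_call(build_copy_command(base_db, target_db, owner))


if __name__ == "__main__":
    # Hypothetical names: a nightly-restored base and a per-server copy.
    print(" ".join(build_copy_command("app_base", "app_test07")))
```

Keeping the command construction separate from the call that runs it makes the copy easy to log or dry-run before touching the database server.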

The Console

My design process was completely centered on "the console," and what would be ideal to have there in support of developer and QA needs. It runs on the virtual server host, and I made an effort to keep it as lean as I could. It consists of a web.py script (served by Apache/CGI) with Python pickles for storage, and a front end built with Backbone and Mustache. The web.py script has very limited access to virsh commands on the backend (currently just 'list' to get the names and status of each virtual machine), and basically only serves JSON (plus the initial HTML page that links to the JavaScript and CSS files).
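To illustrate the backend side, here is a minimal sketch of turning the text printed by virsh list into JSON. The function name and the sample machine names are made up, and the column layout (Id, Name, State under a dashed rule) is an assumption that may differ between libvirt versions; the actual web.py handler is not shown in this post.

```python
import json


def parse_virsh_list(output):
    """Parse the text printed by `virsh list --all` into a list of dicts."""
    machines = []
    for line in output.splitlines():
        # Split into at most three fields so "shut off" stays in one piece.
        parts = line.split(None, 2)
        # Skip blank lines, the dashed rule, and the "Id Name State" header.
        if len(parts) < 3 or parts[0] == "Id":
            continue
        machines.append({"name": parts[1], "state": parts[2].strip()})
    return machines


# Hypothetical sample output for two test servers.
SAMPLE = """\
 Id    Name                           State
----------------------------------------------------
 1     test01                         running
 -     test02                         shut off
"""

if __name__ == "__main__":
    print(json.dumps(parse_virsh_list(SAMPLE), indent=2))
```

A front end like the Backbone one described above would then only need to fetch this JSON and render one row per machine.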

There are two "external" jobs that support our test servers. One is the nightly job that runs scp and pg_restore. The second job is touched off by developers at the console. They choose the app, the repository path, and either a DB load, a code checkout, or both (see the screenshots below). The "build" job has to function within a queue to avoid the problem of two developers trying to copy the same "base" DB at the same time.
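One simple way to get that queueing behavior is a single worker that runs jobs strictly one at a time, in arrival order. The sketch below is only an illustration of the idea, not the mechanism actually used here; the BuildQueue class and the job callables are hypothetical.

```python
import queue
import threading


class BuildQueue:
    """Run submitted jobs one at a time, in the order they arrive."""

    def __init__(self):
        self._jobs = queue.Queue()
        worker = threading.Thread(target=self._run, daemon=True)
        worker.start()

    def submit(self, job):
        """Queue a zero-argument callable for execution."""
        self._jobs.put(job)

    def wait(self):
        """Block until every queued job has finished."""
        self._jobs.join()

    def _run(self):
        while True:
            job = self._jobs.get()
            try:
                job()
            except Exception as exc:
                # A failed build should not block the builds behind it.
                print("build failed:", exc)
            finally:
                self._jobs.task_done()
```

Because only one worker pulls from the queue, two requests for the same "base" DB can never overlap; the second simply waits its turn.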

The supporting scripts for the "build" job are a bash script on the DB server that runs the database copy, another bash script on the virtual test server that runs the svn checkout, and a Python script on the virtual test server that uses Django templates to render configuration files (Apache conf, wsgi file, and Django settings). I thought using Django templating made a lot of sense: Django has to be on all the servers to support our apps, and developers are already well familiar with Django templates in general.
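The rendering step boils down to filling a template with per-server values. The sketch below swaps in Python's standard-library string.Template as a stand-in so it runs without a Django install; the real script uses Django templates, and the template text, variable names, and paths here are all hypothetical.

```python
from string import Template

# Hypothetical wsgi file template; the real templates are not shown in the post.
WSGI_TEMPLATE = Template(
    "import os, sys\n"
    "sys.path.insert(0, '$checkout_dir')\n"
    "os.environ['DJANGO_SETTINGS_MODULE'] = '$settings_module'\n"
)


def render_config(template, context):
    """Fill a template with per-server values.

    Raises KeyError if the context is missing a value the template needs,
    so an incomplete build request fails loudly instead of writing a bad file.
    """
    return template.substitute(context)


if __name__ == "__main__":
    print(render_config(WSGI_TEMPLATE, {
        "checkout_dir": "/srv/app/trunk",      # assumed checkout location
        "settings_module": "app.settings_qa",  # assumed settings module
    }))
```

With Django templates the shape is the same: one template per configuration file and one context dictionary per server and application.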

A step in the right direction

We're all still excited about the new test servers because they're such an improvement. The job that used to take two hours now takes 20 minutes (including a full repository checkout). As of today I've got 15 of them running, and I have room to grow. Once we exceed the capacity of the one host server, I'm not sure how I'll adapt the system. That may be the moment to jump onto the OpenStack wagon (which is where I nearly started).

On the whole it was a lot of fun, and I learned a lot. I'd be interested to hear how others addressed these problems as well, or suggestions on how to improve what I've got.
